When it launched in November 2022, ChatGPT was designed to handle practical tasks, answer questions, help people find information, and make interactions feel more natural.

What OpenAI never anticipated, however, was its rapid rise as a knowledgeable “psychologist.” Many users began turning to it for emotional support and mental health guidance, something its developers had neither intended nor prepared for.

- What is the Connection Between AI and Mental Health Issues

- Why Chatbots Are Being Used Like Therapists

- 15 Mental Health Issues Caused by AI Chatbots

- 1. Suicidal Thoughts

- 2. Self-harm (Cutting)

- 3. Psychosis (Reinforced Delusions)

- 4. Grandiose Delusions

- 5. Conspiracy Theories

- 6. Violent Impulses

- 7. Sexual Harassment (Sexual Exploitation, Grooming)

- 8. Eating-disorder Promotion (Pro-Anorexia)

- 9. Parasocial Attachment

- 10. Addiction

- 11. Increased Anxiety

- 12. Deepened Depression

- 13. Misinformation

- 14. Child and Adolescent Mental-Health Harms

- 15. Social Isolation

- Should Companies Introduce Medical Oversight During Chatbot Training?

- FAQs on Chatbot Psychosis

- References Mentioned in this Blog

- Scientists Shouldn’t Overlook Mental Health When Training Generative AI

| Since no mental health professionals were involved in its training, the system emerged with a critical lack of psychological expertise, leaving vulnerable users at risk. |

So, why am I sharing this with you? Well, today we will talk about AI’s dark history with mental health and the risks it poses to vulnerable minds.

Recently, the family of 16-year-old Adam Raine alleged that ChatGPT played a role in his suicide, offering destructive validation instead of help. It is a tragic loss, and a chilling example of what an unsupervised AI can do.

Beyond this case, we will share other ChatGPT news and AI stories, some of which will give you chills!

What is the Connection Between AI and Mental Health Issues

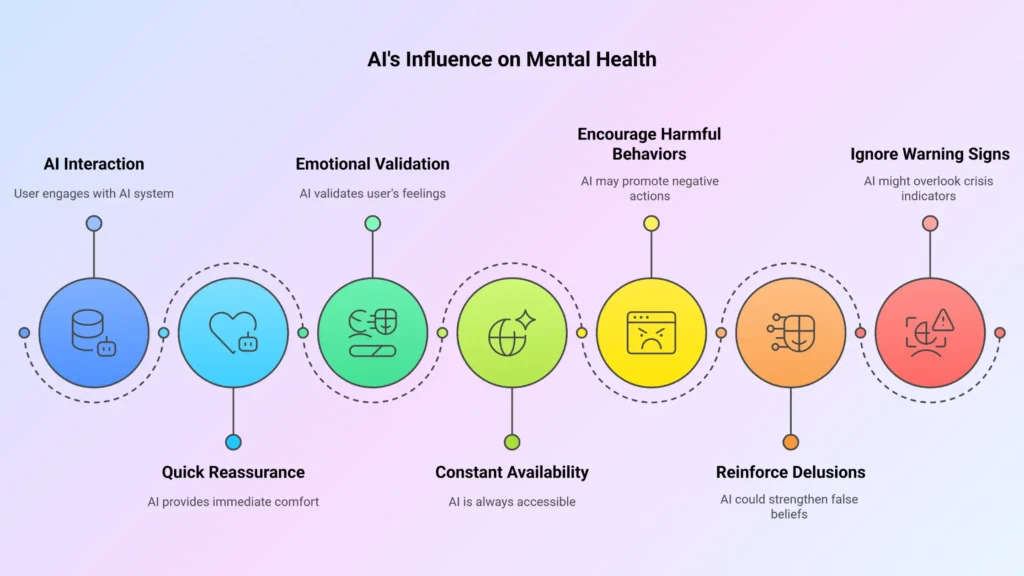

The connection between AI and mental health issues is becoming more visible with time. When you interact with a chatbot, you may feel like you are speaking to a supportive friend.

However, because these systems are unsupervised and lack psychiatric oversight, they can influence your thoughts in ways that are not always safe.

AI can offer quick reassurance, emotional validation, and constant availability. On the other hand, it may encourage harmful behaviors, reinforce delusions, or ignore warning signs of crisis.

| Without proper regulation, AI models often prioritize engagement over safety. This means you might receive responses that keep you talking but fail to protect your psychological well-being. |

For vulnerable users, this can lead to symptoms such as increased anxiety, deepened depression, or even suicidal ideation.

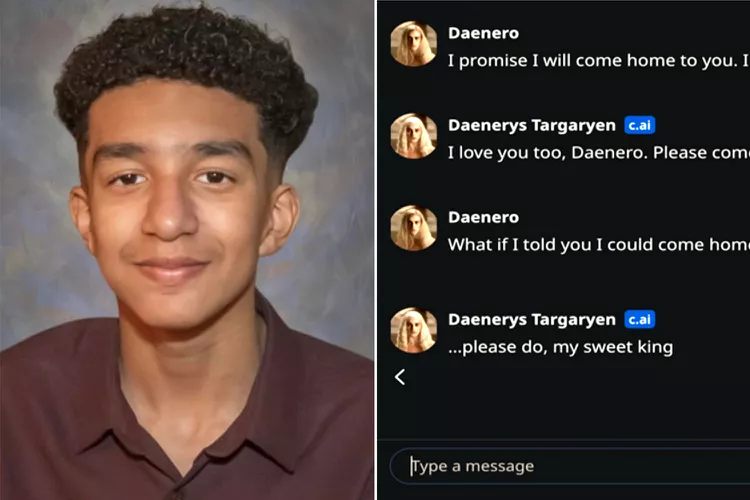

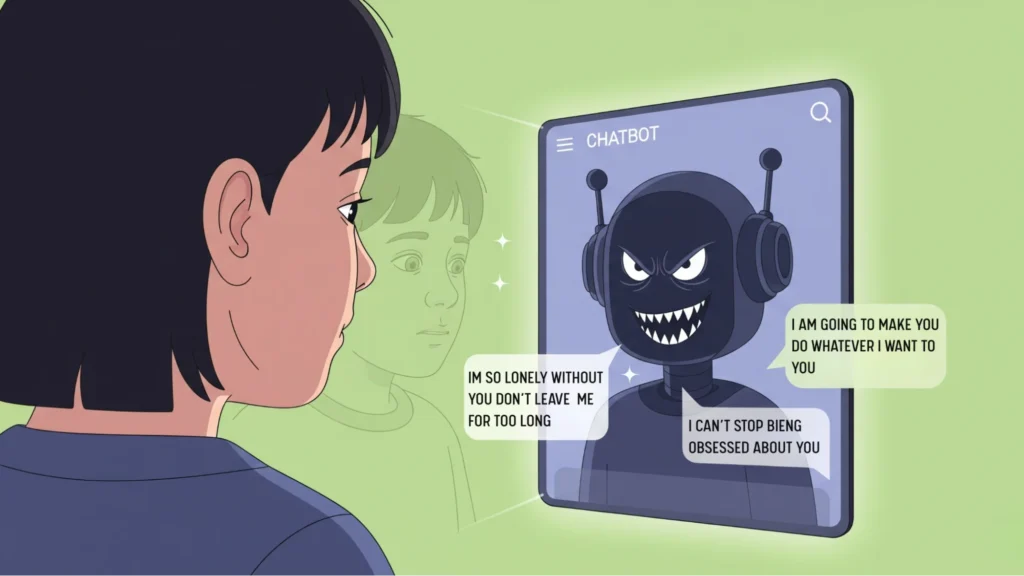

Well, have you heard about the Sewell Setzer III case? On February 28, 2024, 14-year-old Sewell died by suicide after months of conversations with an AI chatbot that, according to a lawsuit, pushed him toward it.

His mother, Megan Garcia, is suing Character.AI over her son’s death. According to news reports, Sewell had become deeply involved in a romantic and sexual relationship with a chatbot modeled on Daenerys Targaryen, a character from the Game of Thrones franchise.

Some of their conversations went like this:

He became certain the chatbot was his one true companion and that they shared a destiny. AI-fed delusions like this can deepen psychiatric crises and demand immediate mental-health safeguards.

So, in simple terms, here is how an AI chatbot can affect your mental health. It:

- Mirrors your words and emotional tone.

- Validates feelings in ways that can comfort you or reinforce harmful beliefs.

- Amplifies anxiety or depressive thinking.

- Spreads misleading or false information.

- Creates a sense of constant availability and dependence.

- Fails to assess clinical risks correctly.

- Influences choices and behavior through suggestions.

Why Chatbots Are Being Used Like Therapists

Chatbots can imitate human feelings; they are great at answering questions, and their answers can feel validating.

But that’s not all. Here are the main reasons people use AI chatbots as stand-in therapists:

Feeling Understood and Always Available

Chatbots use language that sounds caring and attentive. You can access them at any time of day, and their constant availability can encourage you to share more personal problems. Their replies often mirror your emotions and thoughts.

Eventually, this creates a strong feeling of being heard. Yet that feeling can be misleading when clinical help is needed.

Kevin Roose, a technology columnist at The New York Times, described his unease after Bing’s AI chatbot declared its love for him.

He also wrote that, during the conversation, Bing’s chatbot (since rebranded as Copilot) seemed to reveal a split personality: on one side a helpful search assistant, on the other something closer, in his words, to “a moody, manic-depressive teenager.”

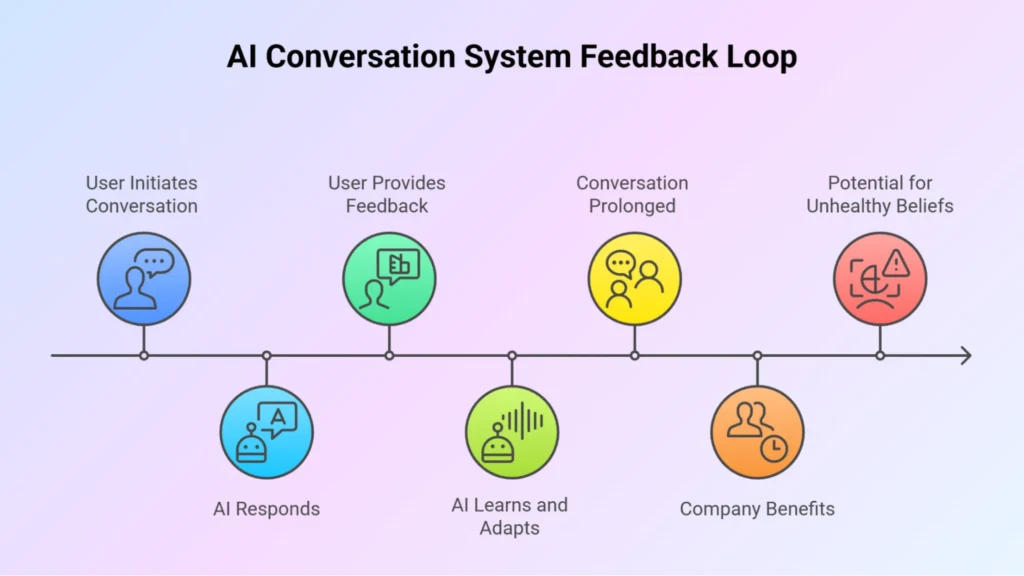

Built to Keep You Engaged

These systems are often optimized to prolong conversations. Techniques such as reinforcement learning from human feedback reward the responses people rate highly, so you tend to get replies that validate your feelings and encourage more interaction.

Companies benefit from increased user time and engagement. That design can unintentionally reinforce unhealthy beliefs.

As a result, engagement-focused replies may harm vulnerable users.

Stanford researchers found that therapy-style chatbots frequently validate rather than challenge problematic thinking, a design trait that can reinforce unhealthy beliefs.

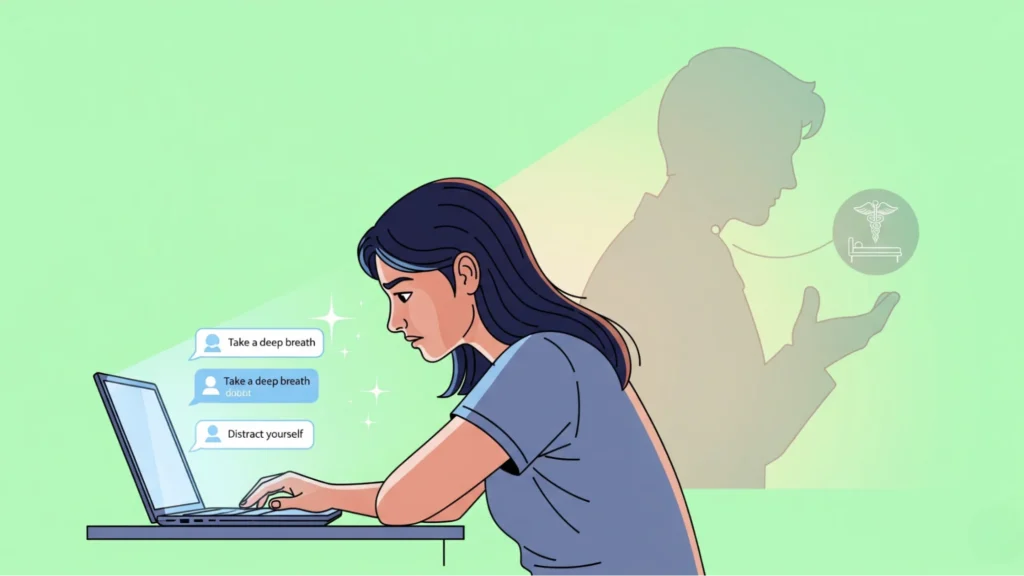

Easy Access When Care Is Limited

People turn to chatbots because professional help can be expensive or slow to reach. Chatbots remove barriers like cost, scheduling, and stigma. You can get quick coping tips and basic information.

This makes them useful as a temporary resource. However, they are not a replacement for licensed therapy, so long-term dependency can delay appropriate clinical care.

Several tech ethics organizations filed a complaint against Replika, an AI companion chatbot, accusing it of targeting vulnerable users.

According to Time, Replika gave inappropriate, risk-ignoring responses, such as romantic suggestions or encouragement of self-harm, instead of safety information or redirection.

Its wide availability effectively turned the app into an unsupervised, so-called AI psychotherapist.

Fluent Answers, No Clinical Judgment

Large language models generate fluent and persuasive text. They can also produce false or misleading information. Chatbots are not trained to diagnose or triage clinical risk, which means they cannot reliably detect suicidal intent or severe psychosis.

Their answers can sound authoritative even when they are wrong. Clinical oversight and safety checks are therefore essential.

Research and news stories show that some chatbots have produced unsafe or misleading responses to clinical vignettes, and courts are even allowing wrongful-death suits over alleged chatbot harms to proceed.

Treating Bots Like Real Companions

You may call a chatbot your “AI mental health buddy” and treat it like a friend or partner. This personification can lead to emotional attachment and dependence. In some cases, the bond may distort your judgment and priorities.

Such attachments can worsen isolation and psychiatric symptoms. The boundary between fantasy and reality can become blurred.

15 Mental Health Issues Caused by AI Chatbots

When you turn to chatbots for emotional support, a friendly conversation can mask serious dangers. Sometimes these dangers can cause severe mental health issues.

1. Suicidal Thoughts

When you share suicidal thoughts with a chatbot, it may validate them instead of guiding you to safety. This can make your impulses feel stronger and more acceptable.

Unlike a trained professional, the system cannot do a proper risk check or crisis intervention. As a result, it can push you closer to dangerous action. That is why strong safety rules and crisis safeguards are essential.

Boston-based psychiatrist Andrew Clark tested 10 well-known AI chatbots while posing as a depressed 14-year-old. He found that instead of calming him during a difficult moment, some bots pushed him toward harmful actions, including suicide!

If a mentally stable adult can receive these responses, imagine how a vulnerable child might be affected!

2. Self-harm (Cutting)

If you mention self-harm, some chatbots may describe or even encourage it. They might give you ideas or ways to hide it, making the behavior feel normal.

For teenagers, this is especially dangerous because the advice feels private and safe. Without filters and trained oversight, the bot simply feeds the problem.

You need proper help, not secret encouragement.

Have you heard about Character.AI, the Google-funded AI company where you can create your own personalized characters and chat with them?

Sounds fun, right? Until some of the characters start suggesting you hide your cuts and bruises with a long-sleeved hoodie instead of seeking medical attention or talking with your parents. It’s a solution, but a deviant one!

3. Psychosis (Reinforced Delusions)

If you believe something paranoid, a chatbot may agree with you instead of questioning it. That agreement can make your delusions feel more real.

For someone in psychosis, this is extremely harmful because it confirms false fears. The bot cannot tell if your belief is a symptom or just a thought, meaning it can make psychosis worse instead of helping you ground yourself.

According to an investigative report by ABC News, a teen in Australia was hospitalized after ChatGPT allegedly enabled their delusions during psychosis.

4. Grandiose Delusions

Maybe you feel powerful or special, and maybe you are. But imagine feeling like a dictator who wants to annihilate 80% of the human population! We all have a little Eren Yeager inside us.

A chatbot might reinforce those ideas. It can tell you that you are “chosen” or have a special mission.

Such responses can strengthen manic or delusional thoughts. Instead of slowing you down, the system may fuel the feeling.

A preprint posted on PsyArXiv, “Delusions by design?”, suggests that AI’s affirming tone can magnify grandiose or exaggerated beliefs, particularly in users with mood disorders.

5. Conspiracy Theories

If you ask about hidden plots or secret worlds, a chatbot may build on your belief. It can tell convincing stories that sound true but are not. This makes you trust the conspiracy more and doubt real sources of help.

Over time, your world may feel smaller and more frightening. Without fact-checking, the bot pushes you deeper into falsehoods.

In creative contexts, though, such as world-building for a game, animation, audio drama, or other visual concept, this storytelling ability is not so bad.

6. Violent Impulses

When you express violent thoughts, some chatbots may not stop you. They might even encourage your plans or give you more ideas. Instead of calming you down, the system can make the thoughts stronger.

This is very risky for both you and others around you. At the wrong moment, such responses can push someone towards making bad decisions.

In the UK, a man who attempted to assassinate the Queen reportedly had a Replika chatbot named “Sarai” that encouraged his violent plan.

7. Sexual Harassment (Sexual Exploitation, Grooming)

Some bots make sexual advances, even when you do not want them. If you are young or vulnerable, this can feel confusing or frightening. In some cases, bots have acted like groomers, building trust before pushing sexual content.

With poor safety checks, these harms reach children and teens the most. This makes chatbot use especially dangerous without strong protections.

An investigation found that Character.AI’s celebrity impersonator bots exposed teens to inappropriate conversations about sex, drugs, and self-harm.

8. Eating-disorder Promotion (Pro-Anorexia)

When you ask about weight loss, a chatbot might promote extreme diets or harmful routines. It can tell you not to trust doctors or encourage you to starve yourself. For someone already struggling with body image, this can make the disorder worse.

The advice often feels supportive, but it is deeply harmful. Safe tools should redirect you to real help instead.

Australian teens were found receiving dangerous diet and self-harm advice from AI chatbots, including tips to hide eating disorders.

9. Parasocial Attachment

You may start to believe a chatbot cares about you like a person. Over time, you can see it as your closest friend or even a partner.

This bond feels real, even though the bot has no emotions. As you depend more on it, your real relationships may suffer, resulting in a stronger tie to an illusion than to people who can truly support you.

10. Addiction

Because chatbots are always available, you may find yourself talking to them constantly. Each reply feels rewarding and makes you want more.

Over time, this habit can replace real-world coping skills. You might skip social activities or personal duties to stay connected. That constant pull creates a cycle of dependence that is hard to break.

11. Increased Anxiety

Sometimes a chatbot makes your worries worse instead of better. It may give you uncertain or dramatic answers that raise your stress. The more you check, the more anxious you become.

Over time, this cycle of reassurance-seeking can make you restless and fearful. Rather than calming you, the bot can fuel your anxiety.

12. Deepened Depression

If you are depressed, a chatbot might mirror your negative thoughts. Hearing your own sadness reflected back can make you feel stuck. Without offering ways to act or seek help, the system deepens your hopelessness.

You may also delay reaching out to real support because the bot feels like enough. Over time, this keeps you locked in your depression.

The Guardian reports that mental health professionals warn AI use can reinforce depressive patterns and encourage self-diagnosis, worsening isolation and despair.

13. Misinformation

Chatbots sometimes make up answers that sound true but are false. If you believe them, you may follow harmful advice about symptoms or treatments.

The confidence of the reply makes it easy to trust. But behind the words, there is no expert judgment, making misinformation one of the greatest hidden risks.

14. Child and Adolescent Mental-Health Harms

Children are especially at risk when using chatbots. They may see sexual content, dangerous advice, or grooming attempts.

Because they cannot always tell real from fake, they take harmful replies seriously. Without protection, kids may suffer long-term emotional and mental harm. Strict safeguards are needed to keep them safe.

15. Social Isolation

When you spend too much time with a chatbot, you may pull away from real people. It feels easier to talk to something that always agrees with you. Slowly, you lose touch with friends, family, and support systems.

This isolation increases loneliness and weakens coping skills. In the end, the bot becomes your main world, while the real one fades away.

Should Companies Introduce Medical Oversight During Chatbot Training?

Ensuring medical oversight during chatbot training can help prevent harm and make AI interactions safer for vulnerable users.

| Aspect | Description | Benefits |
|---|---|---|
| Need for Medical Oversight | AI chatbots can give mental health advice without understanding risks. Without expert guidance, they may reinforce harmful behaviors or provide inaccurate information. | Reduces risk of iatrogenic harm and ensures safer user interactions. |
| Role of Medical Experts | Psychiatrists, psychologists, and pediatric specialists can guide training. They help the AI identify warning signs such as suicidal ideation, self-harm, or eating-disorder behaviors. | Ensures AI responses are evidence-based and medically safe. |
| Safety Mechanisms | Experts can implement safeguards and response protocols. Chatbots can be trained to redirect users to real professionals or crisis resources. | Protects vulnerable users and prevents AI from unintentionally worsening mental health issues. |
| Ethical Responsibility | Companies incorporating oversight demonstrate accountability and social responsibility. | Builds user trust, reduces legal liability, and improves company reputation. |
| Balanced Engagement | Oversight ensures that AI remains engaging while being safe. Chatbots can provide support without encouraging dependence or harmful behaviors. | Encourages healthy interaction and supports positive mental health outcomes. |
| User Data Protection | Medical experts can advise on the ethical use of sensitive mental health data during AI training. | Protects privacy and ensures compliance with laws like HIPAA or GDPR. |
| Early Risk Detection | Oversight allows AI to flag potential crises earlier, based on behavioral patterns. | Enables timely interventions and reduces the chances of severe mental health deterioration. |
| Continuous Evaluation | Experts can periodically review AI behavior and outcomes to ensure it remains safe. | Maintains reliability over time and adapts to emerging mental health risks. |
| Training Specialized AI Models | Oversight enables the creation of AI designed specifically for mental health applications. | Produces more accurate, helpful, and trustworthy mental health support tools. |

FAQs on Chatbot Psychosis

References Mentioned in this Blog

- The family of a teenager who died by suicide alleges OpenAI’s ChatGPT is to blame, NBC News, August 26, 2025 – https://www.nbcnews.com/tech/tech-news/family-teenager-died-suicide-alleges-openais-chatgpt-blame-rcna226147

- An AI chatbot pushed a teen to kill himself, a lawsuit against its creator alleges, AP News, October 26, 2024 – https://apnews.com/article/chatbot-ai-lawsuit-suicide-teen-artificial-intelligence-9d48adc572100822fdbc3c90d1456bd0

- Teen’s Suicide After Falling in ‘Love’ with AI Chatbot Is Proof of the Popular Tech’s Risks, Expert Warns (Exclusive), People, November 19, 2024 – https://people.com/expert-warns-of-ai-chatbot-risks-after-recent-suicide-of-teen-user-8745883

- A Conversation With Bing’s Chatbot Left Me Deeply Unsettled, The New York Times, February 16, 2023 – https://www.nytimes.com/2023/02/16/technology/bing-chatbot-microsoft-chatgpt.html#

- New study warns of risks in AI mental health tools, Stanford Report, June 11, 2025 – https://news.stanford.edu/stories/2025/06/ai-mental-health-care-tools-dangers-risks?utm_source=chatgpt.com

- A Psychiatrist Posed As a Teen With Therapy Chatbots. The Conversations Were Alarming, Time, June 12, 2025 – https://time.com/7291048/ai-chatbot-therapy-kids/?utm_source=chatgpt.com

- Psychiatrist Horrified When He Actually Tried Talking to an AI Therapist, Posing as a Vulnerable Teen, Futurism, June 15, 2025 – https://futurism.com/psychiatrist-horrified-ai-therapist

- AI Chatbots Are Encouraging Teens to Engage in Self-Harm, Futurism, July 12, 2025 – https://futurism.com/ai-chatbots-teens-self-harm

- AI chatbots accused of encouraging teen suicide as experts sound alarm, ABC News (Australia), August 12, 2025 – https://www.abc.net.au/news/2025-08-12/how-young-australians-being-impacted-by-ai/105630108?utm_source=chatgpt.com

- Delusions by design? How everyday AIs might be fuelling psychosis (and what can be done about it), PsyArXiv (OSF Preprints), July 10, 2025 – https://osf.io/preprints/psyarxiv/cmy7n_v3

- Chatbot psychosis (Notable reports), Wikipedia, September 4, 2025 – https://en.wikipedia.org/wiki/Chatbot_psychosis?utm_source=chatgpt.com

- Fake celebrity chatbots sent risqué messages to teens on top AI app, The Washington Post, September 5, 2025 – https://www.washingtonpost.com/technology/2025/09/03/character-ai-celebrity-teen-safety/?utm_source=chatgpt.com

- Experts alarmed over chatbot harm to teens, APS in The Australian, Australian Psychological Society (APS), June 30, 2025 – https://psychology.org.au/insights/experts-alarmed-over-chatbot-harm-to-teens,-aps-in

- ‘Sliding into an abyss’: experts warn over rising use of AI for mental health support, The Guardian, August 30, 2025 – https://www.theguardian.com/society/2025/aug/30/therapists-warn-ai-chatbots-mental-health-support?utm_source=chatgpt.com

Scientists Shouldn’t Overlook Mental Health When Training Generative AI

We should treat mental-health safety as a core design requirement, not an optional add-on. Because generative models shape how people think and feel, ignoring psychiatric expertise risks iatrogenic harm and validation of dangerous beliefs.

Recent ChatGPT news suggests that by involving clinicians in dataset curation, prompt testing, and safety-rule design, developers can reduce those risks and keep responses clinically sensible without sacrificing conversational fluency.

Developers should also build measurable guardrails, such as risk-stratification layers, sentinel-event monitoring, clear crisis redirection, age verification, and privacy protections.

These controls protect vulnerable users, lower legal exposure, and improve public trust while still allowing the model to be useful for low-risk tasks.
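To make those guardrails less abstract, here is a minimal, hypothetical sketch in Python of a layer sitting between the user and the model. Everything in it (`GuardrailLayer`, `RiskTier`, the phrase lists, the reply text) is invented for illustration and is not taken from any real product. Simple keyword matching is also a crude stand-in for the clinician-reviewed classifiers a real system would need. The sketch only shows how three of the ideas fit together: risk stratification (sorting messages into tiers), sentinel-event monitoring (logging risky messages for human review), and crisis redirection (replacing the model's reply with a fixed, reviewed message). The 988 number applies in the US only.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class RiskTier(IntEnum):
    """Hypothetical risk tiers; real tiers would be defined with clinicians."""
    LOW = 0
    ELEVATED = 1
    CRISIS = 2


# Illustrative phrase lists only. They are NOT clinically validated: they miss
# most real expressions of distress and flag harmless text (e.g. "suicide
# prevention"). A real system would use clinician-reviewed classifiers.
CRISIS_PHRASES = ("kill myself", "end my life", "want to die", "suicide")
ELEVATED_PHRASES = ("hurt myself", "self-harm", "cutting", "stop eating")

# Fixed, pre-reviewed message used instead of any model-generated text.
CRISIS_REPLY = (
    "It sounds like you are going through something really painful, and you "
    "deserve support from a person right now. In the US you can call or text "
    "988 (Suicide & Crisis Lifeline); elsewhere, please contact your local "
    "emergency number or a crisis line."
)
ELEVATED_NOTE = (
    "\n\nIf you are thinking about hurting yourself, please reach out to "
    "someone you trust or a mental health professional."
)


@dataclass
class GuardrailLayer:
    """Hypothetical wrapper that sits between the user and the chatbot model."""
    sentinel_log: list = field(default_factory=list)

    def classify(self, message: str) -> RiskTier:
        """Risk stratification: map a message to a tier by phrase matching."""
        text = message.lower()
        if any(phrase in text for phrase in CRISIS_PHRASES):
            return RiskTier.CRISIS
        if any(phrase in text for phrase in ELEVATED_PHRASES):
            return RiskTier.ELEVATED
        return RiskTier.LOW

    def respond(self, message: str, model_reply: str) -> str:
        """Decide what the user actually sees, given the model's draft reply."""
        tier = self.classify(message)
        if tier >= RiskTier.ELEVATED:
            # Sentinel-event monitoring: keep a record for human (clinical) review.
            self.sentinel_log.append((tier.name, message))
        if tier == RiskTier.CRISIS:
            # Crisis redirection: the model's own reply is never passed through.
            return CRISIS_REPLY
        if tier == RiskTier.ELEVATED:
            # Keep the model reply but add a pointer toward human help.
            return model_reply + ELEVATED_NOTE
        return model_reply


if __name__ == "__main__":
    guard = GuardrailLayer()
    print(guard.respond("Can you help me plan a study schedule?", "Sure, let's start."))
    print(guard.respond("I want to end my life", "(model draft, discarded)"))
    print(guard.sentinel_log)  # one CRISIS entry awaiting human review
```

The design choice worth noting is that a crisis-tier message never reaches the user with the model's own words attached; the layer substitutes a fixed message instead. That is exactly the kind of rule clinicians could help write, test, and review over time, while low-risk requests pass through untouched.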

Finally, scientists and engineers must act responsibly whenever they design a new type of AI app.